Round 3: Data Invulnerability vs. Epic Data Indestructability

Ok, so the term “Epic Data Indestructability” doesn’t actually exist, but I needed something for a section title and CommVault doesn’t have a clever, made-up marketing term like “Data Invulnerability” to describe the resiliency built into the Simpana platform. The availability of protected data should be a primary concern of anyone developing a data protection architecture. This is true of both the ability to recover and the time to recovery. After all, what good is a backup if you can’t perform a restore?

If you’ve spoken with an EMC/Data Domain sales rep, no doubt you’ve heard about all the goodness that goes into their Invulnerability Architecture. Some of the architecture points are quite nice, although some are common among many disk vendors and, in my opinion, some are over-emphasized. In a Simpana environment, the biggest competitor for a Data Domain appliance would be more mainstream enterprise disk such as an EMC CX, IBM XIV, NetApp FAS and others. When we compare the data protection and corruption prevention capabilities of the Data Domain appliance, we must also keep in mind the reliability of these kinds of arrays.

Verification and Disk Scrubbing

The first key point Data Domain makes is around End-to-End Verification. Data written to the Data Domain is checksummed, written to disk, then immediately re-read and compared against the checksum to ensure the integrity of the written data. This sounds like a great feature, and it is true that no other vendor, at least that I know of, does this same type of check during the write operation. But is it worth it? Most disk systems, Data Domain included, already create and store checksums for data as it is written. During an application read operation, the data is read and a new checksum is calculated, then compared with the stored checksum. If they are identical, the read proceeds; if they do not match, corruption is assumed and the disk block is recreated from parity. So silent data loss and lost-write scenarios are already covered by most typical storage systems. The real additional benefit of Data Domain checking this data immediately would be protection against corruption that originates within the appliance during the write and that RAID parity can’t correct, such as, perhaps, a filesystem error. Data Domain appliances additionally use disk scrubbing, a scanning technique that regularly reads data and compares it with its stored checksum to proactively identify silent data corruption. A nice feature, but also common in storage arrays such as NetApp, XIV and others. These features are why most enterprise storage arrays are already so reliable. In my 5 years as a consultant at NetApp, I never once saw a NetApp array return corrupt data unless corrupt data was written to it, which is a possibility we should consider.
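
To make the three checks above concrete, here is a minimal sketch of checksum-on-write, verify-on-read, optional read-after-write and scrubbing. It is a toy in-memory model, not how Data Domain, NetApp or any other array actually implements these features; the `ChecksummedStore` class, its method names and the use of CRC32 are all assumptions chosen for illustration.

```python
import zlib


class ChecksummedStore:
    """Toy block store that keeps a CRC32 next to every block it writes."""

    def __init__(self):
        self.blocks = {}     # block_id -> bytearray holding the stored data
        self.checksums = {}  # block_id -> CRC32 recorded at write time

    def write(self, block_id, data, verify_after_write=False):
        checksum = zlib.crc32(data)
        self.blocks[block_id] = bytearray(data)
        self.checksums[block_id] = checksum
        if verify_after_write:
            # Re-read what was just stored and compare it to the checksum.
            self.read(block_id)

    def read(self, block_id):
        data = bytes(self.blocks[block_id])
        if zlib.crc32(data) != self.checksums[block_id]:
            # A real array would try to rebuild the block from parity here.
            raise IOError(f"checksum mismatch on block {block_id}")
        return data

    def scrub(self):
        """Walk every block and return the ids whose checksum no longer matches."""
        return [
            block_id
            for block_id, data in self.blocks.items()
            if zlib.crc32(bytes(data)) != self.checksums[block_id]
        ]
```

Note that every check in this sketch compares stored data against a checksum computed when the block arrived. If the data was already damaged before it reached `write`, all three checks will pass.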

EMC cautions that the invulnerability architecture of Data Domain can only ensure the consistency of data as it was received. If network or application errors upstream cause corruption before the data is received by the Data Domain appliance, the appliance will happily write that corrupt data to disk, preserve it, and restore it back in the same, corrupt, format at a later date. In order to guarantee data is perfectly unchanged, it must be verified against the data as it existed before it left the client server. This is the only way to ensure true end-to-end validity of the backup data—short of actually restoring it. I’ll explain how Simpana does this later in this post.

With strong data integrity assurances already existing in most storage arrays and Data Domain lacking the ability to perform end-to-end verification of data as it existed on the client, perhaps there is more marketing to be found in the Invulnerability Architecture than substance.

RAID 6

I believe the most defining feature of the Invulnerability Architecture is the extra protection afforded by RAID 6. As a NetApp consultant, nearly every customer I spoke with used RAID-DP, which is NetApp’s implementation of RAID 6, and I can’t recall a single incident where 3 individual drives failed and compromised a raidgroup. I did, however, see multiple double-disk failures. The real problem with RAID 6 implementations, with the exception of RAID-DP, is the performance hit on write operations. One of the great benefits of deduplication, however, is its ability to greatly reduce the I/O needed for disk write operations by eliminating the writing of duplicate data. Deduplicated restores, on the other hand, can use all the disk read performance they can get, and RAID 6 has very little read performance overhead. These items make RAID 6 a good fit as a deduplicated backup target.

Once again, though, this capability is not unique to Data Domain. Most storage vendors now also support RAID 6.

Simpana and “Epic Data Indestructability”

Let’s now look into some of the capabilities built into Simpana that help ensure data availability in addition to what hardware alone can provide.

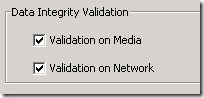

Built into Simpana is a very powerful data integrity process to ensure the immutability of client data. When enabled, backup objects are “integrity tagged” with CRC information as they are transmitted from the source client. When data is received by the MediaAgent, a new tag is generated before the data is written and compared with the original. If they match, the data is written to disk. If they do not match, the job moves to a pending state and the operator is made aware of the condition. The job can then be resumed after the disruption is resolved. For even greater protection, these tags can be stored on the media with the backup to ensure the data remains unchanged during any restore/retrieve/copy or proactive verification operation.
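
The tagging flow described above can be sketched in a few lines. This is only an illustration of the idea and not Simpana’s actual code or wire format; the `TaggedObject` type, the function names, the `"pending"`/`"written"` return values and the choice of CRC32 are all hypothetical.

```python
import zlib
from dataclasses import dataclass


@dataclass
class TaggedObject:
    payload: bytes
    tag: int  # CRC32 computed on the client before transmission


def tag_on_client(payload: bytes) -> TaggedObject:
    return TaggedObject(payload=payload, tag=zlib.crc32(payload))


def receive_on_media_agent(obj: TaggedObject, media: list) -> str:
    """Recompute the tag on arrival; write only if it matches the client's."""
    if zlib.crc32(obj.payload) != obj.tag:
        return "pending"  # nothing is written; an operator must intervene
    media.append((obj.payload, obj.tag))  # keep the tag with the data
    return "written"


def verify_media(media: list) -> bool:
    """Re-check stored tags, as a restore, copy or verification pass might."""
    return all(zlib.crc32(payload) == tag for payload, tag in media)
```

The difference from an array-side checksum is where the tag is born: it is computed on the client, so corruption introduced in transit shows up as a mismatch on arrival instead of being faithfully preserved.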

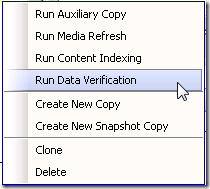

With that said, no business should ever rely on a single device to store all backup data, verified or not. This is why it is easy to create additional data copies within the Simpana platform to protect against typical storage array failure and Data Domain failure alike, not to mention disasters. Copies can be between disk and tape, disk and disk, disk and cloud, or a variety of other combinations to suit your specific needs and SLAs. Integrity tags stored on media are also verified during aux copy operations. This is an excellent feature, since a daily aux copy (which nearly every customer already does) can double as a verification operation, once again ensuring the data being read from media is identical to the data as it existed before it left the client.

Simpana also uses a self-protected indexing model (commonly called “split-indexing”) which ensures a copy of each backup’s index is stored on media, along with the backup. This allows for recovery from media even in the event of total, catastrophic loss of the central catalogue and all backup infrastructure. As long as the media survives, it can be recovered.

In addition to metadata protection, one of the most important design aspects of Simpana’s deduplication architecture is the separation of the deduplication check-in process from the restore process. While new backup data is hashed and efficiently compared against previous hashes in the deduplication database, restores occur directly from disk without requiring any interaction or rehydration from the deduplication database. This means even deduped restores can be performed quickly and efficiently and, more importantly, can still be performed even during catastrophic loss of the deduplication database. To minimize impact of backup operations and to prevent a re-baseline of backup data from being required in the event of corruption or loss of this database, the database is auto-protected and logged. In the event the database becomes lost or corrupt, Simpana would automatically move all current and new backup jobs into a pending state, restore the database, replay the logs and then resume backups from where they left off.
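
The separation between check-in and restore can be shown with a small model. This is a sketch under stated assumptions, not Simpana’s on-disk layout: the `DedupStore` class, the SHA-256 hashing and the per-backup manifest of chunk locations are all hypothetical, with the manifest standing in for an index that is stored alongside the backup.

```python
import hashlib


class DedupStore:
    def __init__(self):
        self.dedup_db = {}   # chunk hash -> location on disk (check-in only)
        self.disk = []       # chunk payloads, addressed by list position
        self.manifests = {}  # backup name -> ordered chunk locations

    def backup(self, name, chunks):
        locations = []
        for chunk in chunks:
            digest = hashlib.sha256(chunk).hexdigest()
            location = self.dedup_db.get(digest)
            if location is None:
                # New data: write it once and remember where it landed.
                self.disk.append(chunk)
                location = len(self.disk) - 1
                self.dedup_db[digest] = location
            locations.append(location)
        self.manifests[name] = locations

    def restore(self, name):
        # Reads follow the manifest straight to disk; dedup_db is not touched.
        return b"".join(self.disk[loc] for loc in self.manifests[name])
```

In this model, clearing `dedup_db` stops new backups from deduplicating against old chunks but leaves `restore` fully functional, which is the property the paragraph above describes.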

Round Results:

The question of this round is not about whether Data Domain is reliable, but whether it is required. Data Domain is a solid platform and the negative feedback I hear is mostly around price, not technology. EMC is in a tough position to recommend storage arrays such as the CX or VMAX to run the most mission critical applications requiring the highest availability and data integrity for primary storage, but then also suggest those arrays are flawed in some way which leaves them susceptible to a variety of data loss scenarios in the backup world that only a Data Domain can protect against. It is here that we begin to see the fine line between marketing and insanity.

The data integrity and resiliency features of Simpana alone allow for outstanding recoverability while ensuring the immutability of the data it is protecting, storing and retrieving—regardless of the target media used. These capabilities are built into the platform, with no additional licensing required, and available for all forms of media.

I’ll finish up this series in my next post to discuss some of the long term benefits or consequences of each deduplication approach as I see it. I expect some of the strongest considerations to be found there.
