In this part of the Storage Virtualization Showdown series, I’ll be focusing on Storage Efficiency. A successful storage virtualization implementation will not only enable the management and simplified movement of storage between external arrays, but also provide a robust set of capabilities that actually maximize the utilization of the back-end equipment.

I’ll be breaking down Storage Efficiency into the below sections and discussing how each product performs at each capability.

- Storage Pooling

- Thin Provisioning

- Tiering and Auto-Tiering

- Deduplication

- VMware Integration (VAAI Support)

Storage Efficiency can make or break the financial case for an architecture, so pay particular attention to this section when weighing your options.

EMC VPLEX

To forewarn you, this section will no doubt be VPLEX’s weakest showing. The stated direction for VPLEX is to rely on back-end array capabilities for storage efficiency while using the VPLEX itself for “Storage Federation”. While this is functionally possible, managing storage at both the back-end and VPLEX levels will be cumbersome for most customers. Additionally, migrating data from one back-end vendor to another requires the admin to fully learn the new back-end vendor’s toolkit instead of leveraging those capabilities heterogeneously at the storage virtualization layer.

Storage Pooling

While external storage can be combined and LUNs distributed across any Storage Volumes in the VPLEX, there is really no management concept of pooling. With competing products, customers issue a command to create a LUN out of a pre-defined pool of storage and let the system spread the device across dozens, if not hundreds, of storage volumes automatically. Device provisioning in a VPLEX is a much more manual task. In addition, it is considered best practice in most cases to create a 1:1 mapping between external LUNs and VPLEX virtual volumes; otherwise you may not be able to leverage external array capabilities for snapshots, clones or replication as easily. For these reasons, it is safe to assume you will get little benefit from VPLEX in terms of storage pooling.

Thin Provisioning

This capability is also left up to the external storage array, leaving administrators with multiple management points to analyze real and virtual storage consumption. It is worth noting the VPLEX does have a “Thin Attribute” configuration option when creating devices to ensure the VPLEX doesn’t do naughty things to your thinly provisioned external storage.

Tiering and Auto-Tiering

While EMC does have some nice Tiering and Auto-Tiering capabilities in other products, you won’t find them in VPLEX. They must be leveraged through the external arrays.

Deduplication

“These aren’t the droids you are looking for.” See: External Arrays

VMware Integration (VAAI)

Understandably, EMC has some tight integration with VMware, most visibly in the EMC Virtual Storage Integrator, a vSphere plugin that provides visibility into the VPLEX and other EMC storage arrays. If you require VMware HA across datacenters within metro distances, this is where VPLEX really shines. Distributed Devices can be created that behave as active/active VMware Datastores, and VMware HA and DRS can be configured to seamlessly fail over Virtual Machines across datacenters during host failures or planned maintenance. As for specific VAAI capabilities such as Hardware Locking, Hardware Copying and Hardware Zeroing, these don’t appear to be supported by VPLEX yet.

HDS VSP

Like most other competitors, VSP packs a suite of storage efficiency technologies right into the storage virtualization layer. As previously stated, this reduces management overhead and ensures migrating back-end storage from vendor to vendor doesn’t require all new training. The VSP also supports internal storage which gives customers more flexibility for expansion.

Storage Pooling

VSP features Hitachi Dynamic Provisioning (HDP), which allows external LUNs to be combined into pools of storage. During provisioning, administrators select the desired LUN size (in 42MB increments) and the appropriate number of 42MB pages are allocated semi-round-robin from all disks in the pool. This provides a few nice benefits. First, by wide-striping LUN contents across many, if not all, back-end disks, performance for the entire pool is nearly perfectly balanced and can be monitored and enhanced from an aggregate pool perspective rather than device by device. Second, the VSP can automatically rebalance load across external LUNs as new capacity is added to the pool. Finally, pooled capacity eliminates the fragmented pockets of storage that are common in non-pooled environments, and it allows administrators and managers to perform planning and trending by reporting on the aggregate pool capacity instead of performing complex calculations on individual devices, minus unusable space pockets.
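To make the page allocation concrete, here is a minimal Python sketch. It assumes plain round-robin placement (the text only describes HDP as semi-round-robin), and the function names and volume names are hypothetical illustrations, not part of any Hitachi tooling.

```python
import math

PAGE_MB = 42  # HDP page size cited in the text


def pages_needed(lun_size_mb):
    """Number of whole 42MB pages required to back a LUN of the given size."""
    return math.ceil(lun_size_mb / PAGE_MB)


def allocate_round_robin(lun_size_mb, pool_volumes):
    """Spread a LUN's pages across the pool volumes; returns pages per volume.

    Assumption: strict round-robin, a simplification of the real placement.
    """
    placement = {name: 0 for name in pool_volumes}
    for page in range(pages_needed(lun_size_mb)):
        placement[pool_volumes[page % len(pool_volumes)]] += 1
    return placement


if __name__ == "__main__":
    # A 420MB LUN over three hypothetical external LUNs in the pool.
    print(allocate_round_robin(420, ["ext_lun_a", "ext_lun_b", "ext_lun_c"]))
```

The point of the sketch is that no single back-end volume ends up holding the whole LUN, which is what balances load across the pool.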

Thin Provisioning

Hitachi Dynamic Provisioning pools allow thin provisioning at the same 42MB page granularity. Zero Page Reclaim also enables previously created “thick” volumes to be converted to “thin” with less effort. The page size is a bit large compared to the finer-grained thin provisioning of some of the competition. For some filesystems, such as NTFS, VxFS and even ZFS, this does not reduce space savings substantially. However, some filesystems write metadata at regular intervals throughout the volume at creation time, and each of those writes allocates a whole 42MB page. On ext3, which writes every 128MB, this can consume 30% of the total volume capacity up front; UFS, which writes at 52MB intervals, will consume nearly the entire volume. While space savings may not be as great as with the competitors’ finer granularity, the VSP does have an edge in performance. A larger page size reduces the total amount of Dynamic Provisioning metadata to be stored and processed. This, combined with a dedicated control cache that sits just off the VSD CPUs, ensures thin provisioning can be achieved and scaled with negligible performance overhead.
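The ext3 and UFS figures can be approximated with a small model. The sketch below assumes that a filesystem writes one small piece of metadata at every fixed interval and that each write lands inside a single page; both are simplifications, and `fraction_allocated` is a hypothetical helper rather than a vendor tool.

```python
import math


def fraction_allocated(volume_mb, page_mb, interval_mb):
    """Fraction of a thin volume's pages allocated by periodic metadata writes.

    Assumption: one small write at every `interval_mb` offset, each touching
    exactly one page of size `page_mb`.
    """
    total_pages = math.ceil(volume_mb / page_mb)
    touched_pages = {offset // page_mb for offset in range(0, volume_mb, interval_mb)}
    return len(touched_pages) / total_pages


if __name__ == "__main__":
    volume_mb = 100 * 1024  # a 100GB volume, chosen only for illustration
    for name, interval_mb in [("ext3", 128), ("UFS", 52)]:
        share = fraction_allocated(volume_mb, page_mb=42, interval_mb=interval_mb)
        print(f"{name}: {share:.0%} of pages allocated at creation time")
```

Under these assumptions the ext3 case comes out close to the 30% quoted above and the UFS case at around 80%, because a 52MB interval is only slightly larger than a 42MB page and so almost every page gets touched.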

Tiering and Auto-Tiering

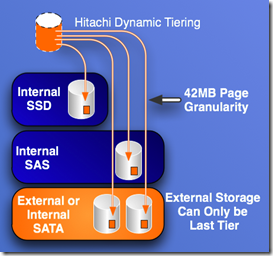

Hitachi Dynamic Tiering (HDT) enables the use of multiple tiers within a single pool and moves “hot” or busy pages of sub-LUN data between the tiers based on the results of automated array monitoring and performance modeling. HDT currently supports up to 3 tiers (SSD, SAS, SATA/External). Currently, external LUNs are always treated as the lowest tier, which makes tiering with only external LUNs impossible. To leverage 2 or 3 tiers, both SSD and SAS drives must be internal to the VSP or HDT will not properly move busy pages. I consider this a significant limitation for tiering on a virtualization-only platform. The flip side, however, is that most customers may want their higher-performance disks as close to the application as possible, so placing SSDs and high-performance SAS disks inside the VSP enclosure may seem like a logical approach.

Deduplication

Primary storage deduplication is still a rare capability and, like most of the competition, VSP does not yet offer it, although it could potentially be leveraged on external arrays.

VMware Integration (VAAI)

VAAI, or vStorage APIs for Array Integration, enables more efficient use of host virtualization resources by offloading common storage tasks to the storage arrays. VAAI includes 3 primitives in vSphere 4.1 and 5 in vSphere 5. In vSphere 4.1, VSP supports all 3 primitives: Hardware Locking, which offloads SCSI reservation locking from the vSphere hosts to a vSphere Atomic Test and Set (ATS) facility leveraged through the storage array, and which can reduce storage contention as VM density increases within larger datastores; Hardware Zeroing, which eliminates time-consuming eager-zeroed thick processing by allowing the array to return from the zeroing operation immediately; and Hardware Copy, which allows the array to perform VM copy operations without requiring the I/O to go through an ESX host, significantly decreasing cloning times. For vSphere 5, VSP supports the newly added Thin Provisioning primitives, which allow the VSP to reclaim thin storage previously allocated to a datastore as VMs are moved or deleted. As of this writing, VSP is the only platform in this comparison that supports this feature.

IBM SVC

The SVC shares many of the same storage efficiency capabilities as the VSP, but implements some of them in a slightly different way. By implementing storage virtualization capabilities within the SVC, customers can also expect to reduce management overhead and training costs as back-end vendors change. The SVC does support internal SSD drives; however, there are some caveats that make this implementation less attractive than other vendors’.

Storage Pooling

SVC clusters pool external storage and allow LUN extents to be provisioned semi-round-robin throughout the pool. During pool creation, an extent size is chosen. The extent size directly determines the total amount of storage that can be managed behind a single cluster. For example, a 16MB extent size limits the cluster capacity to 64TB, while a larger 8GB extent size allows the cluster to grow to 32PB. This is due to the management overhead associated with the number of extents within a cluster. Documented best practice is an extent size of 256MB or 512MB, which allows for 1-2PB of total capacity, although my customers have gone larger. Similar to VSP, provisioning tasks are simplified by enabling administrators to provision LUNs out of a pool instead of manually defining mappings. The SVC also benefits from wide-striping performance (although this could be slightly less balanced if you are using multi-GB extent sizes) and from managing storage capacity as a pool instead of device by device, which reduces waste and simplifies trending. SVC does not automatically rebalance extents as new mdisks are added; however, my customers have created scripts to perform this task almost as easily.
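The capacity figures above all follow from a fixed ceiling on the number of extents per cluster. The sketch below infers that ceiling from the numbers in the text (64TB divided by 16MB is 2^22 extents); treat the constant as an inference from this post rather than a quoted specification.

```python
# Inferred from the text: 64TB / 16MB = 4,194,304 extents per cluster.
MAX_EXTENTS_PER_CLUSTER = 2 ** 22


def max_cluster_capacity_tb(extent_mb):
    """Largest manageable capacity, in TB, for a given extent size in MB."""
    return extent_mb * MAX_EXTENTS_PER_CLUSTER / (1024 * 1024)


if __name__ == "__main__":
    for extent_mb in (16, 256, 512, 8192):
        print(f"{extent_mb}MB extents: {max_cluster_capacity_tb(extent_mb):.0f}TB")
```

The same relationship explains the trade-off discussed later for tiering: doubling the extent size doubles the capacity ceiling, but also doubles the unit of data that has to move.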

Thin Provisioning

IBM does support Thin Provisioning on the SVC platform and allows customers to specify their own granularity by selecting a Grain Size between 32KB and 256KB during volume creation. This gives SVC an efficiency advantage over VSP on those more difficult filesystems (UFS, ext3) that spread metadata across the volume; customers can expect greater thin savings on these filesystems. However, this comes at a price. Unlike VSP, which stores thin metadata in SDRAM, SVC stores this metadata within the volume itself. As this metadata is accessed, it generates additional load on the back-end storage. For random workloads of 70% reads, a thin volume can generate one additional I/O for every user I/O, which can be as much as a 50% performance hit. Thin volumes also consume more CPU cycles, which can impact performance for the entire I/O Group. The smaller the Grain Size, the greater the metadata and the more noticeable the performance overhead. Best practice is to use the largest Grain Size possible for optimal performance and to avoid Thin Provisioning on volumes with heavy I/O demands.
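The 50% figure is simple arithmetic if you assume the back-end storage can deliver a fixed number of I/Os per second and that metadata I/Os cost the same as user I/Os. The sketch below models only that assumption; `effective_user_iops` is a hypothetical helper and ignores caching and CPU effects.

```python
def effective_user_iops(backend_iops, extra_io_per_user_io):
    """User-visible IOPS when each user I/O triggers extra back-end I/Os.

    Assumption: the back-end has a fixed IOPS budget and every metadata I/O
    consumes the same share of it as a user I/O.
    """
    return backend_iops / (1.0 + extra_io_per_user_io)


if __name__ == "__main__":
    budget = 10_000  # illustrative back-end IOPS budget
    thick = effective_user_iops(budget, 0.0)
    thin = effective_user_iops(budget, 1.0)  # one extra I/O per user I/O
    print(f"thick: {thick:.0f} IOPS, thin: {thin:.0f} IOPS")
    print(f"reduction: {1 - thin / thick:.0%}")
```

In practice the extra I/O ratio will usually be below one, since some metadata lookups are served from cache, so the 50% hit is best read as a worst case.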

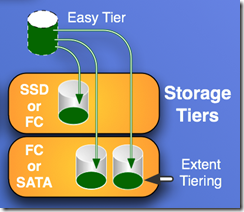

Tiering and Auto-Tiering

IBM Easy Tier enables automated extent-level tiering based on I/O utilization monitoring over a 24-hour period. Easy Tier supports 2 tiers within a pool as opposed to VSP’s 3-tier support. However, the SVC supports all tier types on external arrays, whereas the VSP forces all but the last tier to be inside the VSP. Because SVC performs sub-LUN tiering at the extent level, the extent size must be chosen with tiering in mind. While larger extents allow for greater maximum cluster capacities, they also make data movement between tiers coarser. For example, moving several large 8GB extents between tiers, as opposed to several 512MB extents, can have a dramatic impact on back-end tiering I/O and can waste precious SSD storage if not all of the 8GB of data is “hot”. SVCs do support SSDs within the nodes; however, there are significant limitations to this approach. First, SSDs within a node cannot be shared by any other node, which creates pockets of siloed SSDs. Second, data on SSDs within a node is unavailable if that node fails, unless the data is mirrored elsewhere. Because of this, best practice is to mirror data on internal SSDs between nodes within an I/O Group, which does nothing to help with the already expensive price of SSDs. The good news is that SVC allows external array SSDs to be configured as “generic_ssd” and used for the same heavy I/O while being shared across the cluster without the overhead of mirroring.
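To see how extent size affects SSD waste, consider a rough model in which any extent containing hot data is promoted whole. The hot regions, sizes and function names below are made up for illustration, and the model ignores how Easy Tier actually ranks extents.

```python
def extents_promoted(hot_regions, extent_mb):
    """Set of extent indexes that contain at least part of a hot region.

    hot_regions: list of (start_mb, length_mb) tuples, hypothetical hot spots.
    """
    extents = set()
    for start_mb, length_mb in hot_regions:
        first = start_mb // extent_mb
        last = (start_mb + length_mb - 1) // extent_mb
        extents.update(range(first, last + 1))
    return extents


def ssd_used_mb(hot_regions, extent_mb):
    """SSD capacity consumed if every extent touching hot data is promoted."""
    return len(extents_promoted(hot_regions, extent_mb)) * extent_mb


if __name__ == "__main__":
    # Ten hypothetical 100MB hot spots, one every 10GB (1000MB of hot data).
    hot = [(i * 10240, 100) for i in range(10)]
    for extent_mb in (512, 8192):
        used = ssd_used_mb(hot, extent_mb)
        print(f"{extent_mb}MB extents: {used}MB of SSD for 1000MB of hot data")
```

With scattered hot spots like these, the larger extent size consumes many times more SSD for the same hot data, which is the waste described above.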

Deduplication

There is no primary deduplication support yet in the SVC, but it could be leveraged on external arrays.

VMware Integration (VAAI)

IBM supports the 3 primitives for vSphere 4.1 on the SVC, which include Hardware Zeroing, Hardware Copy and Hardware Assisted Locking. No support as of yet for vSphere 5 and the Thin Provisioning primitives.

NetApp V-Series

NetApp probably has the greatest number of storage efficiency capabilities wrapped into one platform. This makes the FAS Series a tough competitor in terms of storage efficiency and allows the V-Series line to centralize those same capabilities as a virtualization platform. Like the regular FAS arrays, the V-Series also supports internal (or native) storage for additional growth flexibility.

Storage Pooling

V-Series systems pool external LUNs into groups called Aggregates. Aggregates can be 50-100TB in usable size, depending on the V-Series model. A key differentiator between NetApp and other vendors is that NetApp has a dynamic virtualization mapping layer. With other solutions, extents or pages are mapped from logical to physical during the first write and don’t change (except during re-tiering), whereas NetApp continuously updates the mapping as each new or changed write I/O is processed. Of course, this is based on WAFL, the cornerstone of NetApp virtualization since its inception. The main benefit of dynamic virtualization is the ability for NetApp systems to combine large quantities of highly random write I/O, which would normally thrash HDD heads, into sequential streams that minimize head movement. No other storage system can do this on the fly. NetApp allocation and wide-striping is done at a 4KB block level, which makes it the most granular of all platforms. The Aggregate size limitations do somewhat silo the storage managed by a NetApp, but the Data Motion capability can help overcome this. The biggest consideration with NetApp, and it is a big one, is the overhead required on the V-Series platforms. In a FAS series storage system, NetApp requires 10.5% capacity overhead for the WAFL dynamic virtualization layer. This is largely mitigated by NetApp’s ability to use high-performance RAID-DP (RAID 6) in larger-than-typical RAID groups. However, in a V-Series system, customers are forced to use the back-end vendor’s RAID, which will most likely not have larger RAID group sizes. Additionally, V-Series systems require an additional 12.5% overhead on the external LUNs to perform block checksums. This, combined with the WAFL overhead, consumes over 21% of the back-end storage capacity right out of the gate. Compared to the competition, NetApp starts 21% behind in efficiency. NetApp’s challenge is to make up for this through its robust storage virtualization capabilities, some of which are unique to NetApp.
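The “over 21%” figure can be reproduced by compounding the two overheads. The sketch below assumes the block checksum overhead is taken from the raw external LUN first and the WAFL overhead from what remains; that ordering is an assumption, and the helper names are hypothetical.

```python
WAFL_OVERHEAD = 0.105            # from the text
BLOCK_CHECKSUM_OVERHEAD = 0.125  # from the text


def usable_fraction(*overheads):
    """Fraction of raw capacity left after applying each overhead in turn."""
    usable = 1.0
    for overhead in overheads:
        usable *= 1.0 - overhead
    return usable


def break_even_savings(*overheads):
    """Data reduction needed just to match a platform with no such overhead."""
    return 1.0 - usable_fraction(*overheads)


if __name__ == "__main__":
    usable = usable_fraction(BLOCK_CHECKSUM_OVERHEAD, WAFL_OVERHEAD)
    print(f"usable: {usable:.1%}, overhead: {1 - usable:.1%}")
    needed = break_even_savings(BLOCK_CHECKSUM_OVERHEAD, WAFL_OVERHEAD)
    print(f"savings needed to break even: {needed:.1%}")
```

Read this way, thin provisioning and deduplication together need to save a little over a fifth of the stored data before the V-Series pulls ahead of a platform without this overhead.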

Thin Provisioning

NetApp Thin Provisioning is really built into the platform. The same dynamic virtualization that enables mapping of write I/Os on demand also enables Thin Provisioning. In fact, the I/O behavior of NetApp systems configured for Thin Provisioning and for Thick Provisioning is identical; thick provisioned systems just enforce capacity guarantees that Thin Provisioning does not. Moving from thick to thin and back on NetApp is instantaneous and requires no data movement, just a simple volume or LUN property change. This, combined with the finest allocation granularity (4KB), really gives NetApp the edge in both space savings and performance. However, it is unlikely that the additional thin provisioning efficiencies provided by NetApp are enough on their own to overcome the 21% deficit.

Tiering and Auto-Tiering

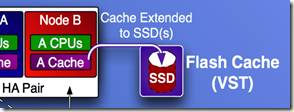

NetApp marketing positions a capability called Virtual Storage Tiering as the NetApp tiering solution. I actually dislike this term. I feel NetApp missed an opportunity to differentiate themselves as having a unique approach and instead followed the tiering marketing herd, causing more confusion than good. Contrary to the marketing, NetApp doesn’t do automated tiering. Instead, they allow SSD PCI-E cards to be inserted into the controller heads and used as a second cache tier. As new I/Os are accessed, they are first populated into the controller’s SDRAM and, once they reach cache expiration, move into the SSD cache for much longer storage before being evicted entirely. As you can see, this is more of a caching innovation than a storage tiering solution. The genius of this solution, however, is in its simplicity. There are no tiering algorithms, monitoring cycles or back-end movements of data. Instead, as data is accessed it is populated in cache and then in the SSD cache, providing the same benefits that tried-and-true storage caching has for years, just on a much larger and more economical scale. There are some considerations, however. The SSD cache is read-only, so writes are not cached on SSD. This isn’t as big of a deal as it seems, for a few reasons. First, SSDs don’t deliver nearly the same IOPS for writes as they do for reads, so using SSDs for read-only traffic is very efficient in terms of IOPS. Second, as I’ve already mentioned, NetApp systems combine sets of fully random writes into sequential streams that can be written to disk orders of magnitude faster than on the competition. For this reason, NetApp caches writes in a small SDRAM write cache and de-stages them to disk, typically much faster than they accumulate. The other large concern with the cache being both read-only and located within the controller is that during failovers the caching benefit is halved, and during fail-backs the cache has to re-warm, missing on most cache read attempts until it fills back up.
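The caching behavior described above can be sketched as a two-level read cache in which blocks evicted from RAM are demoted to a larger SSD cache before being dropped. This is a toy model with simple LRU eviction at both levels; the class name, the LRU policy and the promotion of SSD hits back into RAM are assumptions for illustration, not NetApp’s actual algorithm.

```python
from collections import OrderedDict


class TwoLevelReadCache:
    """Toy read cache: a small RAM level backed by a larger SSD level."""

    def __init__(self, ram_slots, ssd_slots, read_from_disk):
        self.ram = OrderedDict()   # block_id -> data, oldest entry first
        self.ssd = OrderedDict()
        self.ram_slots = ram_slots
        self.ssd_slots = ssd_slots
        self.read_from_disk = read_from_disk  # callable: block_id -> data

    def read(self, block_id):
        """Return (data, source), where source is 'ram', 'ssd' or 'disk'."""
        if block_id in self.ram:
            self.ram.move_to_end(block_id)  # mark as most recently used
            return self.ram[block_id], "ram"
        if block_id in self.ssd:
            data, source = self.ssd.pop(block_id), "ssd"
        else:
            data, source = self.read_from_disk(block_id), "disk"
        self._insert_ram(block_id, data)
        return data, source

    def _insert_ram(self, block_id, data):
        self.ram[block_id] = data
        if len(self.ram) > self.ram_slots:
            # Demote the least recently used RAM block to the SSD level.
            old_id, old_data = self.ram.popitem(last=False)
            self.ssd[old_id] = old_data
            if len(self.ssd) > self.ssd_slots:
                self.ssd.popitem(last=False)  # evicted entirely


if __name__ == "__main__":
    cache = TwoLevelReadCache(1, 2, lambda block_id: f"data-{block_id}")
    for block_id in (1, 2, 1, 1):
        print(block_id, cache.read(block_id)[1])
```

Note that there is no monitoring cycle or scheduled data movement anywhere in the model: placement is a side effect of reads, which is the simplicity the paragraph above is praising.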

Deduplication

Finally, we have a winner! NetApp is the only storage virtualization platform in this comparison to provide primary storage deduplication. When deduplication is enabled, NetApp collects fingerprints of blocks as new writes are allocated. It saves these fingerprints in a fingerprint database and searches it for duplicates on a regular basis, usually nightly. During the run, any fingerprint matches are compared block-by-block to ensure they are exact matches before the duplicate block is replaced with a pointer to the already existing block. This capability, once again based on WAFL, is efficient in terms of capacity and performance. Most customers who leverage NetApp dedup will see no performance degradation and many will actually see performance improve. This is because blocks that are deduplicated on disk are also deduplicated in cache, which increases cache efficiency, especially in VDI-type workloads. Deduplicated NetApp volumes are limited to 16TB in total size, and deduplication is limited to the objects within each volume (remember that a NetApp volume can contain many LUNs). The other thing to be aware of is that actual deduplication savings vary depending on data types. I’ve seen customers using NetApp Thin Provisioning and Deduplication achieve 90%+ capacity savings in VMware environments. I’ve also seen deduplication in the single digits for databases such as Oracle, in the 20% range for Exchange, and in the 40-70% range for file shares, but your rates will vary. Keep in mind that NetApp has a 21% overhead from the start, so be sure the additional efficiencies in Thin Provisioning and Deduplication are enough to offset that investment, or that you favor other aspects of the platform enough to compensate for the difference.
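The post-process approach described above (fingerprint first, verify block-by-block, then replace with a pointer) can be sketched in a few lines. This is an illustration only: SHA-256 stands in for whatever fingerprint NetApp actually uses, and the in-memory dictionary stands in for the fingerprint database.

```python
import hashlib


def deduplicate(blocks):
    """Map each block id to the id of the block that will hold its data.

    blocks: dict of block_id -> bytes. A block that maps to itself is kept;
    a block that maps to another id becomes a pointer to that block.
    """
    by_fingerprint = {}  # fingerprint -> ids of kept blocks with that print
    pointers = {}
    for block_id in sorted(blocks):
        data = blocks[block_id]
        fingerprint = hashlib.sha256(data).digest()
        for candidate in by_fingerprint.setdefault(fingerprint, []):
            # A fingerprint match is only a hint; verify byte for byte.
            if blocks[candidate] == data:
                pointers[block_id] = candidate
                break
        else:
            by_fingerprint[fingerprint].append(block_id)
            pointers[block_id] = block_id
    return pointers


if __name__ == "__main__":
    blocks = {0: b"a" * 4096, 1: b"b" * 4096, 2: b"a" * 4096}
    pointers = deduplicate(blocks)
    kept = len(set(pointers.values()))
    print(pointers, f"savings: {1 - kept / len(blocks):.0%}")
```

Because the scan runs after the writes have landed, the write path itself does no fingerprint lookups, which fits the observation above that most customers see no performance degradation.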

VMware Integration (VAAI)

NetApp also supports the 3 primitives for vSphere 4.1 on the V-Series, which include Hardware Zeroing, Hardware Copy and Hardware Assisted Locking. No support as of yet for vSphere 5 and the Thin Provisioning primitives.

Next Up

Congratulations if you’ve made it this far! Whew, this was a long post full of content. Once again, feel free to share your perspective or set the record straight. In the next post of this series, I’ll focus on Data Protection capabilities such as Snapshots and Mirroring.

I did spend a lot of time trying to confirm the 10.5% space overhead for the WAFL dynamic virtualization layer, but did not have any luck. Could you please share the source for this? Also for the block checksum loss on external LUNs.

The amount of lost space is huge if you want to virtualize arrays behind V-Series.

Thank you

Tarik,

The best place to find this information is in the V-Series Planning guide. Below is a direct link to the NetApp NOW site article. You may need a NOW login to see the data.

One thing I didn’t mention about V-Series is that NetApp offers an option called Zone Checksums. Zone Checksums don’t consume any additional capacity, so only the 10.5% WAFL overhead applies. However, they are not quite as fast as Block Checksums. Most V-Series implementations I saw used Block Checksums unless they had lower performance requirements.

https://library.netapp.com/ecmdocs/ECMM1278375/html/install/lunset6.htm