In the final part of this Storage Virtualization Series, I’ll be discussing the High Availability (HA) capabilities of each of the products. This section will be most important to customers who prize maximum uptime above all other considerations—even storage efficiency.

This post is broken down into four key HA areas:

- Redundancy: Can the product sustain a controller or component failure? What about cascading failures?

- Performance: What sort of I/O degradation can be expected during component failures?

- Non-Disruptive Software Upgrades: What type of impact can a customer expect during code upgrades?

- Non-Disruptive Hardware Upgrades: As the hardware is perpetually upgraded, what sort of impact can the customer expect?

IBM SVC

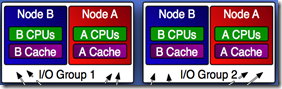

Redundancy: Although SVC nodes group together to share back-end disks as part of a larger cluster, HA domains are limited to pairs of nodes called I/O Groups. Write caching is protected by mirroring the cache between both nodes of the I/O Group. During node failures, FC paths to the downed node are disabled and host MPIO software fails I/O over to the paths on the surviving node. This failover behavior is very similar to that of mid-range, modular arrays. During sustained I/O Group failures, volumes can be manually re-presented through surviving I/O Groups, if necessary. During power failures, SVC nodes offload write-cache contents to an internal hard drive for protection.
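The host-side failover described above can be illustrated with a toy model. This is a minimal sketch, not IBM's or any MPIO vendor's implementation: the `Path` and `MultipathDevice` names are hypothetical, and real MPIO drivers also weigh path priorities (for example, preferred-node paths), which this plain round-robin sketch ignores.

```python
from dataclasses import dataclass


@dataclass
class Path:
    name: str           # hypothetical path label, e.g. "hba0-node1"
    node: str           # node (controller) the path terminates on
    online: bool = True


class MultipathDevice:
    """Toy host MPIO model: round-robin across online paths only."""

    def __init__(self, paths):
        self.paths = paths
        self._next = 0

    def fail_node(self, node):
        # A node failure takes down every path that terminates on that node.
        for p in self.paths:
            if p.node == node:
                p.online = False

    def next_path(self):
        online = [p for p in self.paths if p.online]
        if not online:
            raise OSError("all paths to the volume are down")
        path = online[self._next % len(online)]
        self._next += 1
        return path


# Two paths to each node of an I/O Group; fail node1 and keep issuing I/O.
dev = MultipathDevice([Path("p0", "node1"), Path("p1", "node1"),
                       Path("p2", "node2"), Path("p3", "node2")])
dev.fail_node("node1")
print({dev.next_path().node for _ in range(4)})  # {'node2'}
```

Because the surviving node's paths were already configured, failover here is just a matter of skipping offline paths; no host reconfiguration is modeled.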

Performance: During node failures, the CPU and cache resources of the failed node are unavailable to the I/O Group, which reduces the achievable performance of that I/O Group by 50%. Additionally, a lone surviving node can no longer mirror its write cache, so it switches to write-through caching mode. This increases write latency and could cause a noticeable performance impact, depending on the cache available in the back-end storage and the length of write I/O bursts.
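The degraded-mode behavior above reduces to two rules: capacity follows the number of live nodes, and write-back caching requires a mirroring partner. The sketch below encodes just those two rules; `IOGroupState` and `io_group_state` are hypothetical names, and the linear capacity scaling is a simplifying assumption rather than a measured SVC figure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IOGroupState:
    capacity_fraction: float  # share of the pair's CPU/cache still available
    cache_mode: str           # "write-back", "write-through" or "offline"


def io_group_state(nodes_online: int, nodes_total: int = 2) -> IOGroupState:
    """Simplified degraded-mode model of a two-node I/O Group.

    Assumptions: capacity scales linearly with surviving nodes, and write-back
    caching is allowed only while the cache can be mirrored to a partner node.
    """
    if not 0 <= nodes_online <= nodes_total:
        raise ValueError("nodes_online must be between 0 and nodes_total")
    if nodes_online == 0:
        return IOGroupState(0.0, "offline")
    # Mirroring needs two live nodes; a lone survivor falls back to write-through.
    mode = "write-back" if nodes_online >= 2 else "write-through"
    return IOGroupState(nodes_online / nodes_total, mode)


for up in (2, 1):
    print(up, io_group_state(up))
```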

Non-Disruptive Software Upgrades: SVC supports fully non-disruptive code upgrades. Like most mid-range arrays, it accomplishes this by loading the code and rebooting each SVC node in an I/O Group individually. As during node failures, this process limits available I/O to 50% and disables write caching, so upgrades are best scheduled for non-peak load times.

Non-Disruptive Hardware Upgrades: This is the area where SVC really is ahead of the pack. Since SVC nodes are built from commodity x86 hardware and the virtualization magic happens in software, node upgrades are relatively easy. During generational hardware upgrades, one node within an I/O Group is failed over and its legacy hardware removed. The new hardware replaces the old and assumes its identity (WWPNs) when powered up. No data migration is required, and this process, just like a SW upgrade, can be performed completely non-disruptively to hosts. SVC is the only platform with documented support for this capability and a long track record of customers who have successfully performed these upgrades.
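The key to the swap described above is that hosts are zoned to WWPNs, not to physical serial numbers, so hardware can change underneath an unchanged Fibre Channel identity. The sketch below models only that idea; the `Node` and `IOGroup` classes, the serials and the `wwpn-*` strings are hypothetical placeholders, not real SVC objects or WWPN values.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    serial: str    # physical hardware identity; changes when hardware is swapped
    wwpns: tuple   # Fibre Channel port names that hosts are zoned to
    model: str


@dataclass
class IOGroup:
    nodes: dict = field(default_factory=dict)  # slot name -> Node

    def host_visible_wwpns(self):
        return sorted(w for n in self.nodes.values() for w in n.wwpns)

    def replace_hardware(self, slot, new_serial, new_model):
        """Swap one node's hardware; the replacement inherits the old WWPNs."""
        old = self.nodes.pop(slot)  # node has been failed over and removed
        self.nodes[slot] = Node(new_serial, old.wwpns, new_model)
        return old


group = IOGroup({
    "node1": Node("SN-001", ("wwpn-1a", "wwpn-1b"), "gen1"),
    "node2": Node("SN-002", ("wwpn-2a", "wwpn-2b"), "gen1"),
})
before = group.host_visible_wwpns()
# Upgrade one node at a time so the partner keeps serving I/O.
for slot, serial in (("node1", "SN-101"), ("node2", "SN-102")):
    group.replace_hardware(slot, serial, "gen2")
print(group.host_visible_wwpns() == before)  # True
```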

NetApp V-Series

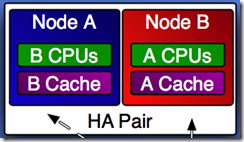

Redundancy: NetApp handles HA much like SVC: two nodes form an HA pair, each providing failover capability for the other. V-Series controllers, however, mirror write cache locally to battery-backed NVRAM before also replicating it to the partner node's NVRAM. During node failures, host MPIO software switches paths as with SVC. During power failures, NVRAM protects the contents of the write cache.

Performance: During node failures, NetApp is also limited to 50% of maximum I/O, since the entire workload now runs on the surviving node. The V-Series does, however, have one advantage over SVC: because write cache is also protected by battery-backed local NVRAM, write caching isn't disabled during failovers. This is crucial, as the NetApp virtualization layer requires write caching to function.
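The contrast with SVC comes down to where a write is protected before it is acknowledged. The toy write path below assumes, for illustration only, that the acknowledgement depends on the local battery-backed copy and that partner mirroring is best-effort; it is not NetApp's actual WAFL/NVRAM logic, and `NvramController` and `handle_write` are hypothetical names.

```python
class NvramController:
    """Toy controller with a battery-backed NVRAM write log."""

    def __init__(self, name):
        self.name = name
        self.nvram_log = []
        self.online = True


def handle_write(data, local, partner):
    """Log a write to local NVRAM, mirror it to the partner if possible, then ack.

    Simplifying assumption: the acknowledgement depends only on the local
    battery-backed copy, so a partner outage does not force write-through.
    """
    if not local.online:
        raise RuntimeError(f"{local.name} is down; host MPIO must use another path")
    local.nvram_log.append(data)        # protected locally by battery-backed NVRAM
    mirrored = partner.online
    if mirrored:
        partner.nvram_log.append(data)  # second copy in the partner's NVRAM
    return {"acked": True, "mirrored": mirrored}


a, b = NvramController("ctrl-a"), NvramController("ctrl-b")
print(handle_write("w1", a, b))  # {'acked': True, 'mirrored': True}
b.online = False                 # partner fails; writes are still cached
print(handle_write("w2", a, b))  # {'acked': True, 'mirrored': False}
```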

Non-Disruptive Software Upgrades: V-Series also supports fully non-disruptive SW upgrades in much the same way as mid-range arrays. One node at a time is upgraded and rebooted while its partner takes over I/O processing. As with SVC, upgrades should be performed during non-peak load times, since 50% of processing resources will be unavailable during the upgrade process.

Non-Disruptive Hardware Upgrades: Unfortunately, NetApp does not yet have this capability. While migrations aren't required to upgrade from one hardware generation to the next, customers should expect a short downtime window for the entire cluster while the hardware is upgraded. This type of array-wide scheduled downtime can be a real burden for customers who require non-stop operations. Data ONTAP Cluster-Mode is expected to help address this problem; however, it doesn't support FC SAN today.

EMC VPLEX

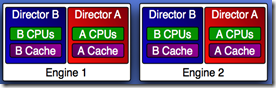

Redundancy: VPLEX has a leg up on SVC and NetApp thanks to its clustered architecture. As with the competition, each engine consists of two redundant directors. Unlike the competition, however, redundancy is also maintained between engines. This provides protection against cascading failures of multiple directors or even entire engines. VPLEX Metro uses write-through caching, so there is no risk of losing cached data during a director failure. VPLEX Geo uses write-back caching, because the distance between clusters would add too much latency to synchronous writes. VPLEX Geo mirrors write cache between director peers to keep data durable if a director fails. Write cache and distributed cache contents are offloaded to SSD during power failures to protect them.
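To see why distance pushes VPLEX Geo toward write-back caching, it helps to estimate the round-trip delay a synchronous write must wait for. This is a back-of-the-envelope physics estimate, not a VPLEX calculation: it assumes roughly 5 microseconds per kilometer of one-way propagation in optical fiber and ignores all switch, array and protocol overhead, so real latencies will be higher. The function name and `round_trips` parameter are illustrative.

```python
FIBER_US_PER_KM = 5.0  # light in optical fiber covers ~200,000 km/s, i.e. ~5 us per km


def added_sync_write_latency_ms(distance_km: float, round_trips: int = 1) -> float:
    """Propagation delay a synchronous remote write adds, in milliseconds.

    Assumptions: fiber path length equals the given distance, and switch, array
    and protocol processing time are ignored. round_trips > 1 models protocols
    that need several exchanges per write.
    """
    one_way_us = distance_km * FIBER_US_PER_KM
    return 2 * one_way_us * round_trips / 1000.0


for km in (10, 100, 1000, 3000):
    print(f"{km:>5} km: +{added_sync_write_latency_ms(km):.1f} ms per write")
```

At metro distances the added delay stays around a millisecond, while at continental distances it grows to tens of milliseconds per write, which is why acknowledging from local cache becomes attractive.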

Performance: In a multi-engine cluster, best practice is to configure host MPIO across directors in two or more engines. With proper planning, the total impact of losing a director is reduced, because host I/O is redistributed across the surviving directors. This can mean less performance impact during director failures than with SVC and V-Series. Since VPLEX Metro configurations already use write-through caching, this behavior does not change during director failures. It is unclear which method VPLEX Geo configurations use to protect newly written write-back data during director failures: is write cache re-mirrored to surviving directors, or do they switch to write-through mode, which could cause significant performance problems over long distances?
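The benefit of spreading host MPIO across engines can be quantified with simple arithmetic. The sketch assumes two directors per engine (as described above), I/O spread evenly across every zoned director, and survivors absorbing the redistributed load; `surviving_share` is a hypothetical helper, not a VPLEX sizing tool.

```python
def surviving_share(engines: int, directors_per_engine: int = 2, failed: int = 1) -> float:
    """Fraction of a host's director capacity left after `failed` directors die.

    Assumptions: the host's MPIO is zoned evenly to every director in `engines`
    engines, and the surviving directors absorb the redistributed I/O.
    """
    zoned = engines * directors_per_engine
    if not 0 <= failed <= zoned:
        raise ValueError("failed must be between 0 and the number of zoned directors")
    return (zoned - failed) / zoned


for engines in (1, 2, 4):
    print(f"zoned across {engines} engine(s): {surviving_share(engines):.1%} remains")
```

Zoning a host to a single engine leaves it in the same 50% position as a two-node SVC or V-Series pair; each additional engine shrinks the share lost to any one director.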

Non-Disruptive Software Upgrades: VPLEX supports fully non-disruptive SW upgrades by splitting the directors into their A and B halves, upgrading all A directors first and then all B directors. This reduces available I/O by 50%; however, as with the other solutions, upgrades would most likely be planned for non-peak load times. One significant limitation is the requirement that distributed volumes be disabled and accessible from only one location during the upgrade. This could be considered disruptive depending on the configuration, but again, it applies only to distributed volumes.
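The A-then-B sequence and the distributed-volume restriction can be sketched as follows. This assumes exactly one A and one B director per engine, with all directors on one side upgraded together; the director labels (`E1-A`, ...) and site names are hypothetical, and the code models the limitation as stated above rather than VPLEX's actual upgrade procedure.

```python
def upgrade_phases(engines: int):
    """Yield (side, directors_offline, capacity_left) for an A-then-B upgrade.

    Assumption: each engine has one A and one B director, and all directors on
    one side are upgraded together.
    """
    directors = [f"E{e}-{side}" for e in range(1, engines + 1) for side in "AB"]
    for side in "AB":
        offline = [d for d in directors if d.endswith(side)]
        yield side, offline, (len(directors) - len(offline)) / len(directors)


def accessible_sites(distributed: bool, upgrading: bool):
    """Sites that can access a volume (site names are hypothetical)."""
    if not distributed:
        return ("site1",)  # a local volume lives at one site regardless
    # Limitation described above: distributed volumes are reachable from
    # only one location while the upgrade runs.
    return ("site1",) if upgrading else ("site1", "site2")


for side, offline, left in upgrade_phases(engines=2):
    print(side, offline, f"{left:.0%} capacity")
print(accessible_sites(distributed=True, upgrading=True))
```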

Non-Disruptive Hardware Upgrades: This does not appear to be supported today, although the VPLEX architecture should be well suited to non-disruptive HW upgrades in the future. I could find no documentation indicating that it is supported, describing a process for performing it, or showing that it is on the roadmap.

HDS VSP

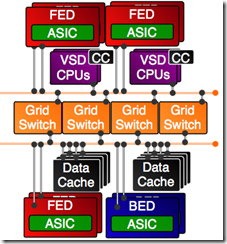

Redundancy: The HDS VSP has some of the most sophisticated HA capabilities of all the competitors in this space. Its hybrid x86/monolithic architecture enables a fully active/active, shared-everything platform that can sustain multiple cascading failures. Active/active pools of Front-End Directors, Back-End Directors, Virtual Storage Directors (VSDs) and Cache Adapters are all fully meshed by a redundant set of Grid Switches. As long as at least one of each of these components survives, data access will not be interrupted. Write cache is protected through mirroring between two separate Cache Adapters and offloaded to SSD during power failures.
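The survival rule above ("at least one of each component") can be expressed as a single check, reading "each of these components" as including the Grid Switches. The component counts below are a hypothetical configuration, not a real VSP build, and the check covers data access only; it does not test whether write cache is still mirrored across two Cache Adapters.

```python
from collections import Counter

# Component types named in the text; every type must keep at least one survivor.
REQUIRED = ("FrontEndDirector", "BackEndDirector", "VSD", "CacheAdapter", "GridSwitch")


def data_accessible(online: Counter) -> bool:
    """True while at least one component of every required type is online.

    Models data access only; it does not check whether write cache is still
    mirrored across two Cache Adapters.
    """
    return all(online[kind] >= 1 for kind in REQUIRED)


# Hypothetical configuration; real VSP component counts vary by model and build.
online = Counter(FrontEndDirector=4, BackEndDirector=4, VSD=4, CacheAdapter=2, GridSwitch=2)
for kind in ("VSD", "VSD", "FrontEndDirector", "CacheAdapter", "GridSwitch"):
    online[kind] -= 1  # cascading failures, one component at a time
    print(f"lost a {kind}: accessible={data_accessible(online)}")
```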

Performance: During component failures, the surviving components share the workload, so losing 1 of 4 VSDs results in 25% less total VSD performance. One advantage of this design is that the loss of one component won't take other components offline. For example, a CPU failure on an SVC, VPLEX or V-Series that resulted in the loss of a node or director would also take that node's or director's caching and connectivity resources offline. The VSP architecture limits the failure domain to the failed component alone, which in turn limits the performance lost during component failures.
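The failure-domain difference can be made concrete by comparing what a single processor failure removes under each design. This sketch assumes resources are spread evenly across units and that a node-based design loses the whole unit's cache and ports along with its CPU; `remaining_after_cpu_failure` is a hypothetical helper, and the figures are illustrative, not benchmarks.

```python
def remaining_after_cpu_failure(design: str, units: int) -> dict:
    """Fraction of each resource pool left after one processor failure.

    "node": the failed CPU takes its whole node or director offline, along with
    that unit's cache and front-end ports (SVC, V-Series, VPLEX as described).
    "component": only the failed processor board is lost (VSP as described).
    Assumption: each resource is spread evenly across `units` nodes or VSDs.
    """
    lost = 1 / units
    if design == "node":
        return {"cpu": 1 - lost, "cache": 1 - lost, "ports": 1 - lost}
    if design == "component":
        return {"cpu": 1 - lost, "cache": 1.0, "ports": 1.0}
    raise ValueError(f"unknown design: {design!r}")


print(remaining_after_cpu_failure("node", units=2))       # two-node pair
print(remaining_after_cpu_failure("component", units=4))  # 1 of 4 VSDs lost
```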

Non-Disruptive Software Upgrades: Like the other platforms, VSP supports fully non-disruptive SW upgrades, but it does so with far less disruption than competing solutions. This is partly because the microcode runs on CPUs that are separate from the Front-End and Back-End I/O processing CPUs, which enables a very smooth upgrade process (no full node or director reboots are required). In most cases, host MPIO software won't even see paths go offline, which gives VSP the least application impact during SW upgrades of any of the competitors.

Non-Disruptive Hardware Upgrades: This is the most disappointing area of the VSP's HA capabilities. VSP currently has no support for generational HW upgrades. This could seriously erode the labor cost savings of storage virtualization by forcing customers to rip and replace the entire VSP platform, complete with business-disrupting host-based migrations, every 5-7 years when upgrading to the next generation.

Next Up

Remember that external storage behind a Storage Virtualization platform is only as highly available as the platform it is virtualized through. This is why it is critical that the solution you choose meets the end-to-end HA requirements of your business. As always, you are welcome to share your feedback.

In the concluding post of this series, I'll recap some of the strengths and weaknesses of each platform and share the enhancements I think each will need in order to compete successfully in the future.