Final Round: Data Mobility and Solution Longevity

This post ends the series where I’ve been comparing appliance-based deduplication with Data Domain vs. Simpana Native Deduplication and explaining why I think native deduplication is the best approach. If you haven’t read the earlier posts in this series, they can be found here:

Backup data can exist for a long period of time, in some cases forever. It is also well known that the longer you retain data on deduplicated media, the greater your deduplication savings. These reasons make deduplicated media, such as is available with Data Domain, an excellent target for long term storage of backup and compliance archive data. However, this also means that the data management strategies we pick should be designed for the long term (5-7 years at least).

Hardware Is Interchangeable…or Is It?

Migrating hardware has notoriously been painful. In my previous job as a Storage Administrator, we would spend great portions of the year just migrating applications from one storage system to another. With the invention of server and storage virtualization, much of that has changed in a very good way. Virtualization allows hardware to be more agnostic and easily swapped out as it ages or as vendors need to be replaced. In the backup world, where data may need to be retained for years, it is important that we have a solid, long-term strategy for dealing with these hardware refreshes or vendor changes.

The good news is that Data Domain does support replication between devices. An important feature is that it allows the data to be replicated—or in this case migrated—in deduplicated form. For this reason, it should be easy enough to replicate your backup data to the new device, switch over the backup application access paths and then decommission the old device. This migration/upgrade path works well as long as both you and EMC are committed to Data Domain in the long run.

Migrating deduplicated data off dedup appliances, however, is extremely difficult. Let’s say you have 100TB of data you have kept for 3 years of a 7-year retention. Fortunately, deduplication has reduced that by 95% (a 20:1 ratio) and you are only storing 5TB. However, the maintenance on your Data Domain expires. Suddenly, *shock* you are no longer getting that great pricing you first received for a new appliance and EMC refuses to budge (I’m sure this never happens). Let’s also say that you’ve found another dedup appliance vendor or decided that the native dedup approach is much more affordable or unlocks valuable capabilities not available in the appliance approach. Moving to another approach would require a migration.

The first major hurdle in the migration is rehydration. Even though your data was deduplicated down to 5TB, you would have to migrate the entire logical set—100TB. This is because the deduplication occurs within the appliance so only Data Domain can replicate this data in deduplicated form. Also remember, the read speeds of deduplicated data are significantly slower than the backup throughput—so much so that they are not published. Such a migration, using impaired read speeds that must copy the full 100TB logical set across a backup infrastructure already loaded with non-deduplicated backup traffic, could take weeks. Imagine when it becomes petabytes…
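To put rough numbers on that, here is a minimal back-of-the-envelope sketch in Python. The 100TB and 95% figures come from the example above; the sustained read rate is purely a hypothetical placeholder, not a published or measured Data Domain number, so substitute whatever your own environment actually sustains.

```python
# Rough estimate of how long a rehydrated migration might take.
# The throughput figure is an illustrative assumption, not a vendor number.

TB = 10**12  # decimal terabyte, in bytes


def migration_days(data_tb: float, throughput_mb_per_s: float) -> float:
    """Days needed to copy data_tb terabytes at a sustained throughput."""
    seconds = data_tb * TB / (throughput_mb_per_s * 10**6)
    return seconds / 86_400


logical_tb = 100.0     # size before deduplication (from the example above)
dedup_savings = 0.95   # 95% reduction, i.e. 20:1
stored_tb = logical_tb * (1 - dedup_savings)

assumed_read_mb_s = 100.0  # hypothetical sustained read rate while rehydrating

rehydrated = migration_days(logical_tb, assumed_read_mb_s)
deduplicated = migration_days(stored_tb, assumed_read_mb_s)

print(f"Stored on disk:    {stored_tb:.1f} TB")
print(f"Rehydrated copy:   {rehydrated:.1f} days")
print(f"Deduplicated copy: {deduplicated:.1f} days")
```

Whatever read rate you plug in, the rehydrated copy takes about 20 times as long as a deduplicated one at the same rate, because that ratio depends only on the deduplication savings.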

This problem has lingered for years with tape. But, unlike disk, tape has no maintenance. So it was perfectly acceptable to store it in a cave until the retention expired and not worry about a migration. Disks fail and must be replaced or you could lose the whole dataset. So you either pay maintenance for the remainder of the retention, go through the painful migration or pay the premium for the upgrade and stick with the Data Domain hardware. The more your data grows, the more difficult the migration becomes and the more “locked-in” you are to your vendor.

Native IS Future-Proof

Because the Simpana platform can use any type of disk or tape to begin with, it is easy to migrate between vendors. In a similar scenario, let’s say you were using Simpana Native Dedup on an EMC CX and wanted to migrate to an IBM XIV. Simpana, understanding the deduplication layout, would migrate only the 5TB of deduplicated data, making the migration much easier to handle.
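The idea can be sketched with a toy example in Python. This is not Simpana’s actual on-disk format or API; the `DedupStore` class, the `migrate` function and the tiny 4-byte chunk size are all hypothetical, chosen only to show why software that understands the dedup layout can copy each unique block once instead of rehydrating every backup.

```python
import hashlib


def chunk(data: bytes, size: int = 4) -> list[bytes]:
    """Split data into fixed-size chunks (real products use far larger blocks)."""
    return [data[i:i + size] for i in range(0, len(data), size)]


class DedupStore:
    """Toy store: unique chunks keyed by hash, plus per-backup lists of hashes."""

    def __init__(self) -> None:
        self.chunks: dict[str, bytes] = {}
        self.backups: dict[str, list[str]] = {}

    def write(self, name: str, data: bytes) -> None:
        refs = []
        for c in chunk(data):
            digest = hashlib.sha256(c).hexdigest()
            self.chunks.setdefault(digest, c)  # keep only the first copy
            refs.append(digest)
        self.backups[name] = refs

    def read(self, name: str) -> bytes:
        """Rehydrate a backup by following its chunk references."""
        return b"".join(self.chunks[d] for d in self.backups[name])

    def stored_bytes(self) -> int:
        return sum(len(c) for c in self.chunks.values())


def migrate(src: DedupStore) -> DedupStore:
    """Dedup-aware migration: copy each unique chunk once, then the references."""
    dst = DedupStore()
    dst.chunks = dict(src.chunks)
    dst.backups = {name: list(refs) for name, refs in src.backups.items()}
    return dst


old = DedupStore()
old.write("monday", b"AAAABBBBCCCCDDDD")
old.write("tuesday", b"AAAABBBBCCCCEEEE")  # differs only in the last chunk

logical = sum(len(old.read(name)) for name in old.backups)
new = migrate(old)
print(f"logical bytes: {logical}, bytes actually migrated: {new.stored_bytes()}")
```

An appliance that hides this layout from the backup software can only hand back the rehydrated stream from `read`, which is why the full logical set has to cross the wire in that case.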

Native dedup customers will be in a great position to let a variety of hardware vendors compete for the best price rather than being cornered and potentially faced with lose-lose decisions. Unlike deduplication appliances, migrating between hardware vendors also won’t require new training for Backup Admins, as Simpana Native Deduplication is agnostic to hardware change.

Simpana also natively supports deduplication to tape. This means multi-year compliance backup or archive sets can be deduplicated on tape and stored on that maintenance-free media without a forced migration every few years. When it is time to migrate data from old media to new, Simpana makes this easy too with a media refresh capability built into the platform.

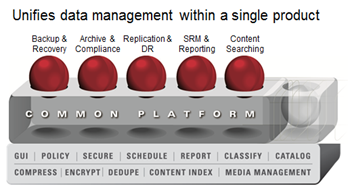

The final consideration for Simpana Native Dedup is around future capabilities. End-to-End deduplication is a core part of the Simpana platform. There are a lot of great capabilities in v9 that leverage deduplication and I’m sure many future capabilities will leverage the technology in clever new ways. By choosing an appliance approach today, you may limit your ability to take advantage of new features built on native deduplication as the platform continues to evolve.

Final Round Results:

As I’ve said before, Data Domain is a solid appliance and the question of this series wasn’t so much about whether it is a good approach as much as it was about whether Simpana Native Deduplication is a better approach. If you are on a legacy backup product today and you absolutely can’t stomach a change in software, then Data Domain might be a good fit. However, if you are dissatisfied that your backup software is not a technological leader or growing weary of the high cost of deduplication appliances, Simpana is definitely worth a look. It could save you a lot in both time and resources over the life of your backup data.

So, what do you think of the series? Have I missed the mark? Have I left anything out or misconstrued the facts? Does this give you something to think about or help your decision in any way? I’d be interested in hearing your thoughts.

Hello,

Thank you a lot for all this important information. This is a very good article.

I think that when you wrote this post, you were working at CommVault.

Since you have left CommVault, do you still think that Simpana is better than Data Domain?

Thank you again.

Mickael,

Thanks for your note. I do still like CommVault and I think it is a solid choice for a data protection and archive platform. I also think it has some of the best software-based deduplication available. That said, Data Domain does have a few things going for it that also make it a really great option.

First, Data Domain appliances just work. They are easy to configure and minimize the chance of human error. CommVault (and really any other software dedup vendor) has specific requirements to ensure deduplication works properly. Being unaware of, or skimping on, either the hardware or design best practices can cause a lot of pain. CommVault is definitely more complex to set up properly.

Second, Data Domain appliances have native NFS/CIFS support. This gives them some flexibility as a direct dump location for database backups (such as RMAN) and also allows them to be used as an easy archive tier (provided deduplication ratios justify the extra cost). For Simpana, you would be required to use the Oracle client (which is very robust) or, for filesystem capabilities, deploy the archiving agent to move files from a production filesystem into the CommVault archive. Both ways work; Data Domain just makes it easier.

The biggest thing CommVault has going for it is price. After investing in CommVault software there is minimal up-charge to enable deduplication. It doesn’t make sense to buy Data Domain in that scenario when CommVault is so much less expensive–especially when you consider you will be giving up many dedup-based CommVault features.

Now, outside of CommVault, I still think some of the other backup players struggle with software dedup and Data Domain is still a good fit there. For example, I haven’t found a customer yet who is happy with TSM deduplication. Appliance-based dedup seems to be the best choice for certain backup software customers.

Thank you again for your note!

Thank you for your quick reply.

See you (in France perhaps).

Hi Mike, What are your thoughts around CommVault dedupe only able to run on x86 platforms when backing up Oracle VLDB environments running on AIX and Solaris servers over FC when the media agents are local to the DB servers? Would this introduce performance bottlenecks or scalability concerns?

Hi Ed,

Sorry for the delayed response; I meant to get back to you sooner. AIX and Solaris systems do still support source-based deduplication. However, you are correct that MediaAgent deduplication is only supported on x86 platforms. While the backups should scale just fine because the client performs deduplication before sending data, restores would be a consideration.

I understand what you are doing there from a LAN-free backup configuration, and it has been a common practice in order to bypass 1GbE Ethernet links and allow clients to back up and restore directly to tape or VTL systems. However, I think that approach is getting more difficult with larger dataset sizes and the additional complexity of managing VTL or tape systems. For systems that have demanding Recovery Time Objectives, I try to encourage customers to consider 2 approaches:

Snapshots/SnapProtect: There is a very strong trend toward using snapshots as a first recovery tier. Snapshots are quick to create and very fast to recover from when compared with all other data protection approaches. CommVault has been a leader in leveraging array-based snaps and includes a lot of integration for creating application-consistent snapshots, with support for about 90% of the storage systems out there. It also includes the ability to proxy those snapshots to deduplicated disk while cataloguing their contents.

10GbE to MediaAgents: With the recent cost reductions in 10GbE, it is very cost competitive with FC. If you can’t do snapshots, I would encourage you to consider 10GbE connectivity from your clients to the MediaAgents for the needed throughput, instead of loading a MediaAgent on the client itself. This keeps your data protection centralized and avoids the complexity of implementing VTLs just for LAN-free disk backups.

Thanks again for your question. Let me know if you have more questions or if I can clarify the above.

Thank you for this great article!

Do you think an integration of a Data Domain in a Commvault backup environment would make sense?

Hi Metin,

Thank you for your question. CommVault really has a cost advantage which will discourage individuals from purchasing both CommVault and DD (Data Domain). For CommVault, deduplication is an incremental cost on top of the software and is usually included in the capacity-based licenses. So, when you purchase CommVault together with an appliance such as Data Domain, which bundles hardware and dedup software, you end up paying for dedup software twice. Data Domain is also much more expensive than the generic storage that CommVault deduplication can use, such as an EMC VNX array with SATA capacity.

That being said, Data Domain offers more flexibility as a multi-purpose storage device. You can use the appliance as deduplicated storage for generic CIFS and NFS archive or miscellaneous data. Some customers have also reported they have had better luck with the stability of Data Domain deduplication in large, complex environments and are willing to pay the premium for something they feel will require less administration and troubleshooting.

Some DBAs also prefer doing backups directly to an NFS Data Domain mount as opposed to backup software because they are more comfortable with native database backup tools. RMAN and Data Domain Boost integration makes DD more attractive to DBAs than ever. The approach works well; however, it lacks the global reporting engine that CommVault provides. So admins would need to build a custom way to monitor and report on backup successes and failures across each individual database server.

Thanks Mike.

Very good analysis and useful info. Thanks again for sharing.

T.Vijayan

Hi Mike…

Thanks for sharing such deep thoughts on how dedupe works and on the trends. It’s really great information.

Thanks

Gopi

Great write-up! Does CommVault’s solution work with Amazon AWS? I am looking to set up data dedupe using CommVault instead of Data Domain and push it to Amazon AWS.

Cheers,

CommVault does support Amazon S3, although I’m not sure how many customers leverage it. I have set up Nirvanix cloud connectivity in my lab before and configured it as an aux-copy for my backup data. It worked well.

I have heard some rumblings about high-turnover data causing issues with CommVault’s cloud storage dedup in that it generated higher storage consumption over time. I’m not sure if they have quite figured out granular aging of data in the cloud. In my opinion, it would be best used for long-term archives of backup data, but not for, say, 30-day retention backups. Discuss with your local CommVault SE.

As a general rule, cloud storage is probably best used for archive data, not DR. It doesn’t matter what software is used. Most businesses would not be able to tolerate restore times from the cloud for critical applications.

I have set up all my remote office backups to move to Amazon S3 using CommVault and it is working well.

It is not as fast as traditional backup, since it relies on bandwidth, but it is still fairly good.

Mike,

Great article. We’ve been a longtime CommVault customer, primarily with tape, and are now moving to a disk backup solution. We’re also a heavy VMware environment. We are considering staying with CommVault and adding disk to an existing SAN, or moving over to EMC and deploying Avamar/Data Domain or NetWorker/Data Domain. We may have to spin out to tape as well and, from what we’ve seen, Avamar is not very well designed for this. With VM servers as the source, using VMware VADP/proxies for backup, how well does CommVault dedupe stack up against an all-EMC solution? Thanks again.

Hi Tom,

Thanks for the comment and the question. I still think CommVault is a great solution and has a lot of integration points for disk, tape and cloud. It also has great support for applications, array-based snapshots and virtual machines. I do think EMC innovation has fallen a bit behind current needs and they have a tendency to bundle multiple technologies (Avamar, NetWorker, Data Domain) instead of providing a more integrated one that is easier to manage. I’m sure some would disagree.

If you want to compare CommVault to alternatives, you may consider companies like Veeam, Cohesity and Rubrik. Veeam has tape support, but only supports virtual servers. Cohesity and Rubrik both support cloud-based archiving instead of tape. I would strongly encourage you to consider cloud as an easy alternative to tape that is more future-proof. While it may be easiest to stick with CommVault (still a solid option), I think you will learn a lot in researching these other players who also provide solid and innovative options.